Today, virtually every aspect of our lives touches some part of an online network. While this has certainly improved many areas of life, such as letting us carry handheld devices that can deliver information at any time, it also poses certain risks.

These risks go beyond traditional hacking and data breaches, such as attacks on our bank accounts. What I’m referring to is that so many parts of our lives today are affected by the algorithms behind artificial intelligence (AI). We assume that AI inherently relies on algorithms that work in our best interests. However, what happens when the wrong type of bias enters these algorithms? How might that affect outcomes?

What happens when biased algorithms infiltrate AI systems?

To offer an example, on YouTube, an AI algorithm recommends nearly 70% of all videos, and on social media platforms like Instagram and TikTok, the percentage is even higher. Although these AI algorithms can help users find content they are interested in, they raise serious privacy issues. There is also mounting evidence that some of the recommended content people consume online is dangerous, either because it contains misinformation or because it presents a perspective designed to subliminally sway a person’s political thinking or beliefs.

The creation of a well-rounded, adaptable AI is a challenging technical and social endeavor, but one of the utmost significance.

It is easy to see how AI could have a negative impact on societal norms and online usage patterns, even as we acknowledge the technology’s positive effects. Online sources have a significant influence on our society, and biases in online algorithms can unintentionally foster injustice, shape people’s beliefs, spread false information, and stoke conflict among various groups.

This is where “bad AI” can have truly significant consequences as it relates to unwanted and/or unfair biases.

How biased AI can adversely affect traffic intersections

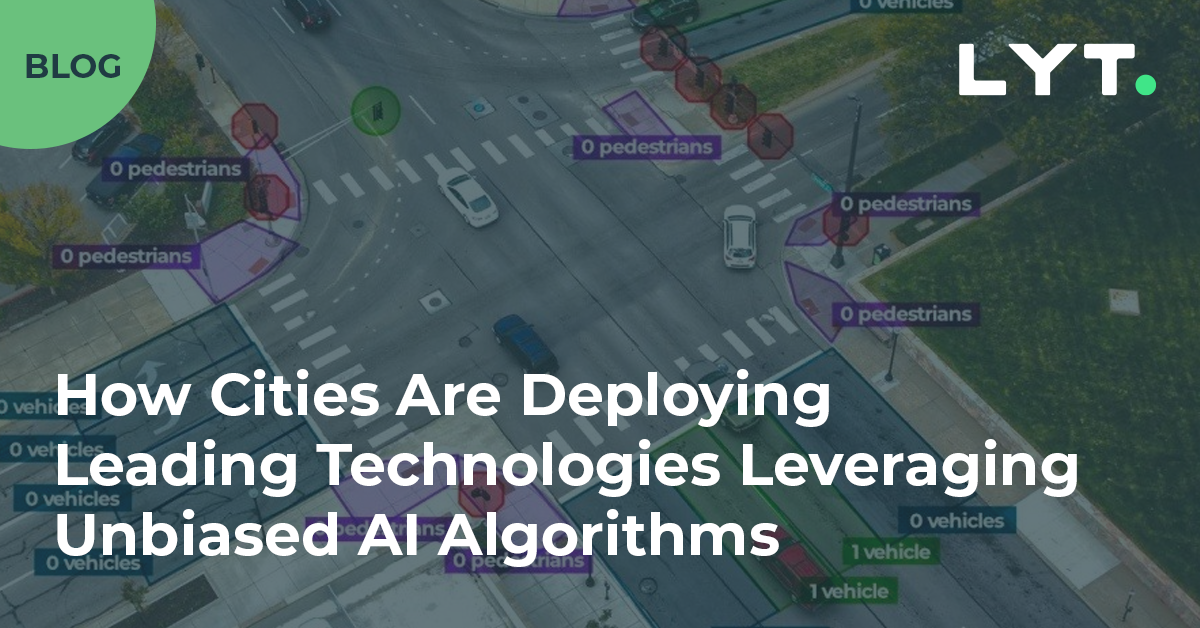

Take traffic intersections as a more real-world example. Long wait times at traffic lights are becoming a thing of the past thanks to new AI technologies being deployed in markets around the country. These Transit Priority solutions leverage real-time traffic data and adapt the lights to compensate for changing traffic patterns, keeping traffic flowing and reducing congestion.

The systems use deep learning in a trial-and-error fashion: a program recognizes when it is not doing well and tries a different course of action, or keeps going in the same direction when it makes progress.
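To make the "try something different when it is not working" idea concrete, here is a minimal sketch. It is a plain trial-and-error (hill-climbing) loop, not deep learning and not any vendor's actual algorithm. The `average_delay` function and its numbers are made up for illustration; a real system would measure delay from live traffic data.

```python
def average_delay(green_seconds: float) -> float:
    """Toy stand-in for measured delay (hypothetical): lowest at a 40-second green."""
    return (green_seconds - 40.0) ** 2 / 10.0 + 15.0


def tune_green_time(start: float, step: float = 5.0, rounds: int = 20) -> float:
    """Keep a change when delay improves; reverse direction when it gets worse."""
    green = start
    best_delay = average_delay(green)
    direction = 1.0
    for _ in range(rounds):
        candidate = green + direction * step
        delay = average_delay(candidate)
        if delay < best_delay:
            # Progress: accept the change and keep moving the same way.
            green, best_delay = candidate, delay
        else:
            # Not doing well: try the other course of action.
            direction = -direction
    return green


print(tune_green_time(20.0))
```

Starting from either a too-short or a too-long green phase, the loop settles on the setting with the lowest toy delay. Note that the loop optimizes only whatever `average_delay` measures, which is exactly why the choice of what to measure matters.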

Sounds like a great idea, right? But what happens if, over time, the AI algorithms embedded in the traffic sensor technology begin to prioritize more expensive vehicles, based on biased algorithms designed to treat people who drive a certain type of vehicle as deserving priority over others?

This is where “bad AI” could adversely affect a very important part of our lives.
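One way such a bias could be spotted is by comparing outcomes across groups. The sketch below is a simple illustration under stated assumptions, not a description of how any deployed system is audited: the log format, the vehicle classes, and the wait times are all hypothetical.

```python
from collections import defaultdict
from statistics import mean


def mean_wait_by_group(records):
    """records: iterable of (group_label, wait_seconds) pairs."""
    waits = defaultdict(list)
    for group, wait in records:
        waits[group].append(wait)
    return {group: mean(values) for group, values in waits.items()}


def disparity_ratio(records):
    """Ratio of the longest group mean wait to the shortest group mean wait."""
    means = mean_wait_by_group(records)
    return max(means.values()) / min(means.values())


# Hypothetical log entries: (vehicle class, seconds waited at a red light).
sample_log = [
    ("luxury", 20.0), ("luxury", 24.0),
    ("economy", 40.0), ("economy", 48.0),
]

print(mean_wait_by_group(sample_log))
print(disparity_ratio(sample_log))
```

A ratio close to 1 would suggest the groups are treated alike; in this made-up log, one class waits twice as long as the other on average, which is the kind of gap that would warrant a closer look.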

Suppose, for example, that these AI-powered transit priority systems are part of a larger Intelligent Transportation System (ITS) that leverages the power of connected vehicle technologies. ITS deployments are only as good as the agnostic cloud-based data-sharing platforms they operate on, and not all are created equal.

Eliminating bias in AI algorithms

These data-sharing platforms have proven highly effective, but only when the cities and municipalities overseeing transportation systems open them up for proper data sharing and keep biased algorithms out. Unfortunately, many municipalities remain locked into contracts with hardware and device providers who claim to operate under “open architecture” yet are unwilling to work with an open data platform. These cities severely restrict themselves from the true possibilities that a cloud-based platform can provide.

Cloud-based transit prioritization systems take the global picture of a system into account and use unbiased, data-centric machine learning to predict the optimal time to grant the green light to transit vehicles. They minimize interference with crisscrossing routes while maximizing the probability of a continuous drive. More importantly, the agnostic cloud-based platform ensures cities leverage a continuously updated system for maximized transit potential, without bias from unwanted sources.

With this technology now readily available, cities, developers, and municipalities have what they need to accelerate the buildout of intelligent transit networks that benefit everyone in the region, fairly and equitably.

Cities such as San José are now leveraging AI to improve the delivery of services to their residents. As the City increasingly uses AI tools, it is more important than ever to ensure that those AI systems are effective and trustworthy. By reviewing the algorithms used in its tools, the Digital Privacy Office (DPO) ensures that the City’s AI-powered technology acquisitions perform accurately, minimize bias, and are reliable. When a City department wishes to procure an AI tool, the DPO follows specific review processes to assess the benefits and risks of any AI system.

For this particular region, we are proud to join companies like Google as one of the few approved AI vendors to participate in city-wide technology deployments because of our unbiased algorithms. As more AI technologies continue to be developed, it will be especially important to ensure that they are built without any biased algorithms, so that local municipal services are used in a truly fair and equitable way.

Originally published here on August 2, 2023